Introduction

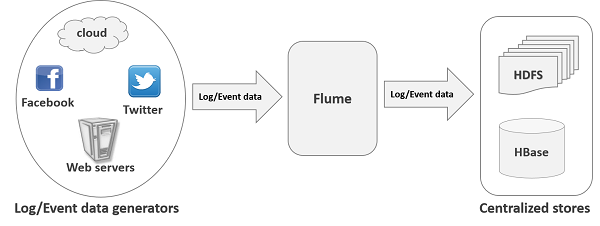

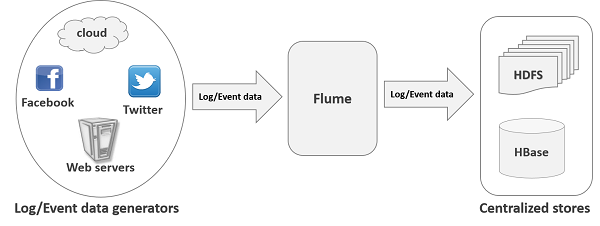

Apache Flume is a tool (a service, or data ingestion mechanism) for collecting, aggregating, and transporting large amounts of streaming data, such as log files and events, from various sources to a centralized data store.

Flume is a highly reliable, distributed, and configurable tool. It is principally designed to copy streaming data (log data) from various web servers to HDFS.

Where does Flume fit, and where does it not?

- Flume is designed to transport and ingest regularly generated event data.

- If you need to ingest textual log data into Hadoop/HDFS, Flume is the right fit for your problem, full stop.

- If your data is not regularly generated (i.e. you are trying to do a single bulk load of data into a Hadoop cluster) then Flume will still work, but it is probably overkill for your situation.

Apache Flume is a distributed, reliable, and available system for efficiently collecting, aggregating and moving large amounts of log data from many different sources to a centralized data store.

A Flume agent is a JVM process that hosts the components through which events flow from an external source to the next destination.

Flume Dataflow:

Terminology:

- Source: consumes events delivered to it by an external source, such as a web server.

- Channel: a passive store that keeps each event until it is consumed by a Flume sink.

- Sink: removes the event from the channel and puts it into an external repository, such as HDFS.
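To make the three roles concrete, here is a toy, in-process model of one source to channel to sink flow, written in Java because Flume itself runs on the JVM. The classes `ToyFlow`, `Source`, `Channel`, and `Sink` are hypothetical and are not Flume's API; a bounded `ArrayBlockingQueue` stands in for a memory channel, and a plain list stands in for HDFS.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Toy model of a Flume-style flow: source -> channel -> sink.
// Hypothetical classes for illustration only, NOT Flume's Java API.
public class ToyFlow {

    // Channel: a passive, bounded store that holds events until a sink takes them.
    static class Channel {
        private final BlockingQueue<String> queue;
        Channel(int capacity) { this.queue = new ArrayBlockingQueue<>(capacity); }
        void put(String event) throws InterruptedException { queue.put(event); } // blocks while full
        String take() throws InterruptedException { return queue.take(); }       // blocks while empty
    }

    // Source: accepts events from an external producer and writes them to its channel.
    static class Source {
        private final Channel channel;
        Source(Channel channel) { this.channel = channel; }
        void receive(String event) throws InterruptedException { channel.put(event); }
    }

    // Sink: removes events from its channel and hands them to an external store
    // (a list here, standing in for HDFS).
    static class Sink {
        private final Channel channel;
        private final List<String> store;
        Sink(Channel channel, List<String> store) { this.channel = channel; this.store = store; }
        void drain(int count) throws InterruptedException {
            for (int i = 0; i < count; i++) {
                store.add(channel.take());
            }
        }
    }

    // Runs source and sink on separate threads so a small channel cannot deadlock the flow.
    public static List<String> run(List<String> events, int capacity) {
        Channel channel = new Channel(capacity);
        List<String> store = new ArrayList<>();
        Source source = new Source(channel);
        Sink sink = new Sink(channel, store);

        Thread sourceThread = new Thread(() -> {
            try {
                for (String e : events) source.receive(e);
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
            }
        });
        Thread sinkThread = new Thread(() -> {
            try {
                sink.drain(events.size());
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
            }
        });
        sourceThread.start();
        sinkThread.start();
        try {
            sourceThread.join();
            sinkThread.join(); // join() makes the sink thread's writes to store visible here
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the flow", ex);
        }
        return store;
    }

    public static void main(String[] args) {
        List<String> delivered = run(List.of("GET /index.html", "GET /about.html", "GET /contact.html"), 1);
        System.out.println(delivered); // [GET /index.html, GET /about.html, GET /contact.html]
    }
}
```

Even with a channel capacity of 1, every event arrives in order, because the source simply waits whenever the channel is full. Real Flume channels also wrap puts and takes in transactions, which is where much of the reliability comes from; this sketch deliberately leaves that out.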

Command:

- flume-ng version: prints the installed version of Flume.

Creating a Flume agent

- To define the flow within a single agent, you need to link the sources and sinks via a channel.

- You need to list the sources, sinks, and channels for the given agent, and then point each source and sink to a channel.

- A source instance can specify multiple channels, but a sink instance can only specify one channel.

Defining a Flow:

For example, an agent named agent_foo reads data from an external Avro client and sends it to HDFS via a memory channel.
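Flume agents are configured with a Java properties file. The sketch below embeds a configuration for agent_foo (component names modeled on the Flume User Guide's example, so treat them as illustrative) and checks it against the wiring rules from the previous section: every source lists one or more channels under the plural key `channels`, and every sink names exactly one channel under the singular key `channel`. The `AgentConfigCheck` class and its `check` method are hypothetical; Flume performs its own validation when an agent starts. A real agent also needs settings omitted here, such as the Avro source's `bind` and `port` and the HDFS sink's `hdfs.path`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical checker for the source/channel/sink wiring of a Flume agent config.
public class AgentConfigCheck {

    // agent_foo: Avro source -> memory channel -> HDFS sink (names are illustrative).
    static final String AGENT_FOO = String.join("\n",
        "# list the sources, sinks and channels for the agent",
        "agent_foo.sources = avro-appserver-src-1",
        "agent_foo.sinks = hdfs-sink-1",
        "agent_foo.channels = mem-channel-1",
        "agent_foo.sources.avro-appserver-src-1.type = avro",
        "agent_foo.channels.mem-channel-1.type = memory",
        "agent_foo.sinks.hdfs-sink-1.type = hdfs",
        "# set channel for source",
        "agent_foo.sources.avro-appserver-src-1.channels = mem-channel-1",
        "# set channel for sink",
        "agent_foo.sinks.hdfs-sink-1.channel = mem-channel-1");

    // Splits a whitespace-separated property value into names (empty list if absent).
    static List<String> names(Properties p, String key) {
        String value = p.getProperty(key, "").trim();
        return value.isEmpty() ? List.of() : List.of(value.split("\\s+"));
    }

    // Returns the wiring problems found; an empty list means the flow is linked correctly.
    static List<String> check(String agent, String config) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(config));
        } catch (IOException e) {
            throw new IllegalArgumentException(e);
        }
        List<String> problems = new ArrayList<>();
        List<String> declared = names(p, agent + ".channels");

        // A source may point at several channels.
        for (String src : names(p, agent + ".sources")) {
            List<String> chs = names(p, agent + ".sources." + src + ".channels");
            if (chs.isEmpty()) problems.add("source " + src + " has no channel");
            for (String ch : chs) {
                if (!declared.contains(ch)) problems.add("source " + src + " uses undeclared channel " + ch);
            }
        }
        // A sink must point at exactly one channel.
        for (String sink : names(p, agent + ".sinks")) {
            List<String> chs = names(p, agent + ".sinks." + sink + ".channel");
            if (chs.size() != 1) {
                problems.add("sink " + sink + " must have exactly one channel");
            } else if (!declared.contains(chs.get(0))) {
                problems.add("sink " + sink + " uses undeclared channel " + chs.get(0));
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        System.out.println(check("agent_foo", AGENT_FOO)); // []
    }
}
```

Pointing `agent_foo.sinks.hdfs-sink-1.channel` at two channels, for instance, would be reported as a problem, while giving the source two channels would not.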
