In this post, I will explain how to set up a multi-node Hadoop cluster in a distributed environment.

We will use five servers:

Hadoop Master: 192.168.150.205 (Hadoop-Master)

Hadoop Secondary NameNode: 192.168.150.206 (hadoop-secondary-Namenode)

Hadoop DataNode1: 192.168.150.207 (Hadoop-DataNode1)

Hadoop DataNode2: 192.168.150.208 (Hadoop-DataNode2)

Hadoop DataNode3: 192.168.150.209 (Hadoop-DataNode3)

Follow the steps below to set up the multi-node Hadoop cluster.

Before proceeding, I suggest reading my post on setting up a single-node Hadoop cluster:

-------

http://www.maninmanoj.com/2015/03/setting-up-single-node-hadoop-cluster.html

-------

The prerequisites are the same as for a single-node Hadoop cluster.

Prerequisite 1: Check whether Java is installed on all servers in the Hadoop cluster. If it is not, install it by following the link below:

----

http://www.maninmanoj.com/2015/03/installing-java-7-jdk-7u75-on-linux.html

----
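Once passwordless SSH (Prerequisite 2) is in place, the check can be run from Hadoop-Master against all five servers at once. The sketch below is one way to do it; the function name `check_java_on_nodes` is hypothetical, and it assumes `java` is on the PATH of a non-interactive remote shell. If Java lives outside that PATH (for example under /opt/jdk1.7.0_75), put the full path in the ssh command instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Java version reported by each cluster node.
# BatchMode makes ssh fail instead of prompting when key login is not set up.
NODES=(192.168.150.205 192.168.150.206 192.168.150.207 192.168.150.208 192.168.150.209)

check_java_on_nodes() {
  local node out missing=0
  for node in "$@"; do
    # "java -version" writes to stderr, so capture both streams.
    if out=$(ssh -o BatchMode=yes "$node" 'java -version' 2>&1); then
      echo "$node: $(printf '%s\n' "$out" | head -n 1)"
    else
      echo "$node: java not found or node unreachable" >&2
      missing=$((missing + 1))
    fi
  done
  # Exit status = number of nodes that failed the check.
  return "$missing"
}

# Usage, on Hadoop-Master:
#   check_java_on_nodes "${NODES[@]}"
```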

Prerequisite 2: Passwordless SSH login must be enabled from Hadoop-Master to itself, and from Hadoop-Master to the Secondary NameNode and to every DataNode.

To set up SSH key authentication, follow the link below:

--------

http://www.maninmanoj.com/2013/08/how-to-perform-ssh-login-without.html

---------

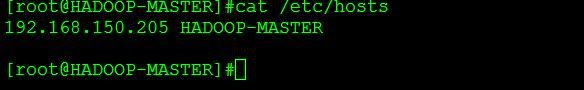

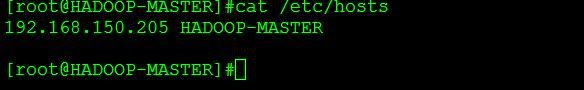
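For reference, the key setup from Hadoop-Master could look roughly like the sketch below. It assumes the same user account exists on every node and that `ssh-copy-id` is installed; the function name `setup_passwordless_ssh` is only illustrative. Afterwards, `ssh 192.168.150.207 hostname` should log in without asking for a password.

```shell
#!/usr/bin/env bash
# Hypothetical helper, run on Hadoop-Master as the Hadoop user.
# Creates an RSA key pair with an empty passphrase (only if none exists yet)
# and installs the public key on every node, including Hadoop-Master itself.
NODES=(192.168.150.205 192.168.150.206 192.168.150.207 192.168.150.208 192.168.150.209)

setup_passwordless_ssh() {
  local key="$HOME/.ssh/id_rsa" node
  if [ ! -f "$key" ]; then
    mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
    ssh-keygen -t rsa -N "" -f "$key"
  fi
  for node in "$@"; do
    # Asks once for this node's password; later logins use the key.
    ssh-copy-id -i "$key.pub" "$node"
  done
}

# Usage:
#   setup_passwordless_ssh "${NODES[@]}"
```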

Prerequisite 3: The "/etc/hosts" file on every node maps each server's IP address to its hostname, as listed above.
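As an illustration, the sketch below appends one entry per server to /etc/hosts, using the IP addresses and names from the server list. It assumes each machine's hostname is set to those names; the function `add_cluster_hosts` is hypothetical, and it skips any IP that already has an entry, so it can be re-run safely. Run it as root on every node. It is also worth checking that a node's own hostname is not mapped to 127.0.0.1 or 127.0.1.1, since Hadoop daemons may then listen on the loopback address only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the cluster nodes to a hosts file (default /etc/hosts).
# IPs that already have an entry are skipped, so re-running it is harmless.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" ip name
  while read -r ip name; do
    # awk exits 0 only if some line's first field equals this IP exactly.
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
      printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<'EOF'
192.168.150.205 Hadoop-Master
192.168.150.206 hadoop-secondary-Namenode
192.168.150.207 Hadoop-DataNode1
192.168.150.208 Hadoop-DataNode2
192.168.150.209 Hadoop-DataNode3
EOF
}

# Usage, as root on every node:
#   add_cluster_hosts
```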

THE CONFIGURATION BELOW MUST BE DONE ON ALL NODES.

--------------------

Once the prerequisites are met, start the Hadoop installation.

Step 1: Download the desired Hadoop package from the link below:

-----

http://apache.claz.org/hadoop/common/

----

cd /usr/local/

wget http://apache.claz.org/hadoop/common/hadoop-1.2.1/hadoop-1.2.1-bin.tar.gz

tar -xzf hadoop-1.2.1-bin.tar.gz

----

Now, rename the extracted folder as follows:

----

mv hadoop-1.2.1 hadoop

-----

Once done, edit ~/.bashrc and add the following variables:

-----

export HADOOP_PREFIX=/usr/local/hadoop

export PATH=$PATH:$HADOOP_PREFIX/bin

-----

Then run the command below to make the changes take effect in the current shell:

-----

exec bash

-----
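Because this step has to be repeated on every node, a guarded version that appends the variables only once may be handy. This is a sketch; `add_hadoop_env` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the Hadoop variables to ~/.bashrc only if they
# are not there yet, so the step can be repeated safely on every node.
add_hadoop_env() {
  local rc="$HOME/.bashrc"
  if ! grep -q '^export HADOOP_PREFIX=' "$rc" 2>/dev/null; then
    cat >> "$rc" <<'EOF'
export HADOOP_PREFIX=/usr/local/hadoop
export PATH=$PATH:$HADOOP_PREFIX/bin
EOF
  fi
}

# Usage:
#   add_hadoop_env && exec bash
#   hadoop version    # should report Hadoop 1.2.1
```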

Step 2:

------

Now, we need to set environment variables in the following file:

vi /usr/local/hadoop/conf/hadoop-env.sh

Set JAVA_HOME to the path of your Java installation:

------

export JAVA_HOME=/opt/jdk1.7.0_75/

------
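To make the same change without opening vi, something like the following could be used. It assumes GNU sed (for `sed -i -E`) and that hadoop-env.sh still contains the commented default `# export JAVA_HOME=...` line; if no such line exists, it appends one. `set_java_home` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set JAVA_HOME in hadoop-env.sh non-interactively.
# Replaces the (possibly commented-out) "export JAVA_HOME=" line in place,
# or appends one if the file has no such line. Requires GNU sed.
set_java_home() {
  local env_file="$1" java_home="$2"
  if grep -qE '^[#[:space:]]*export JAVA_HOME=' "$env_file"; then
    sed -i -E "s|^[#[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    echo "export JAVA_HOME=${java_home}" >> "$env_file"
  fi
}

# Usage:
#   set_java_home /usr/local/hadoop/conf/hadoop-env.sh /opt/jdk1.7.0_75/
```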

Step 3:

------

vi /usr/local/hadoop/conf/core-site.xml

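Add the following properties inside the "configuration" element that is already present. fs.default.name tells every node where the NameNode (Hadoop-Master) listens, and hadoop.tmp.dir is the base directory for Hadoop's local data:

---------

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://192.168.150.205:10001</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
  </property>
</configuration>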

--------------
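If you prefer to write the whole file from a script instead of editing it in vi, a heredoc works. This sketch keeps a .bak copy of the existing file, adds the XML header lines that Hadoop's bundled *-site.xml files start with, and takes the conf directory as an optional argument; `write_core_site` is a hypothetical name. The directory in hadoop.tmp.dir must be writable by the user that runs the Hadoop daemons.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write core-site.xml for this cluster in one go.
# Keeps a .bak copy of any existing file before overwriting it.
write_core_site() {
  local conf_dir="${1:-/usr/local/hadoop/conf}"
  local f="$conf_dir/core-site.xml"
  if [ -f "$f" ]; then
    cp "$f" "$f.bak"
  fi
  cat > "$f" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://192.168.150.205:10001</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
  </property>
</configuration>
EOF
}

# Usage, on every node:
#   write_core_site
```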

Step 4:

---------

vi /usr/local/hadoop/conf/mapred-site.xml

----------

Add the following entries, which point every node at the JobTracker on Hadoop-Master:

----------

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>192.168.150.205:10002</value>
  </property>
</configuration>

-----------
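The same file can also be generated with a small helper that turns name/value pairs into Hadoop's property format. This is an illustrative sketch, not a Hadoop tool: `write_site_xml` is hypothetical, it overwrites the target file, and it does not XML-escape values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Hadoop *-site.xml file from name/value pairs.
# Overwrites FILE; values are written as-is (no XML escaping).
write_site_xml() {
  local file="$1"; shift
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0"?>'
    echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
    echo '<configuration>'
    while [ $# -gt 0 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Usage:
#   write_site_xml /usr/local/hadoop/conf/mapred-site.xml \
#     mapred.job.tracker 192.168.150.205:10002
```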

Step 5:

---------

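In the "masters" file (/usr/local/hadoop/conf/masters), enter the IP address of the Secondary NameNode, 192.168.150.206. Despite its name, in Hadoop 1.x this file lists the host that runs the SecondaryNameNode, not the NameNode.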

Step 6:

--------

In the "slaves" file (/usr/local/hadoop/conf/slaves), enter the IP addresses of all DataNodes (192.168.150.207, 192.168.150.208 and 192.168.150.209), one per line.

--------
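Both files can also be written from the shell. The sketch below (hypothetical `write_cluster_membership`) uses the IP addresses from the server list, one host per line. In Hadoop 1.x these files are read by start-dfs.sh and start-mapred.sh on the machine where you run them, so in this setup the copy on Hadoop-Master is the one that matters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the masters and slaves files for this layout.
write_cluster_membership() {
  local conf_dir="${1:-/usr/local/hadoop/conf}"
  # Host that runs the SecondaryNameNode.
  printf '%s\n' 192.168.150.206 > "$conf_dir/masters"
  # Hosts that run a DataNode and a TaskTracker.
  printf '%s\n' 192.168.150.207 192.168.150.208 192.168.150.209 > "$conf_dir/slaves"
}

# Usage, on Hadoop-Master:
#   write_cluster_membership
```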

Step 7:

--------

Now, run the command below on the NameNode (Hadoop-Master) to format the HDFS filesystem. Do this only once: reformatting erases the existing HDFS metadata.

--------

hadoop namenode -format
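Since formatting wipes the NameNode metadata, a guard like the one below can prevent an accidental re-format. It assumes dfs.name.dir is left at its Hadoop 1.x default, ${hadoop.tmp.dir}/dfs/name, which with the core-site.xml above is /usr/local/hadoop/tmp/dfs/name; `format_namenode_once` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: format HDFS only if the NameNode has no metadata yet.
# Assumes the default dfs.name.dir (${hadoop.tmp.dir}/dfs/name).
format_namenode_once() {
  local name_dir="${1:-/usr/local/hadoop/tmp/dfs/name}"
  if [ -d "$name_dir/current" ]; then
    echo "NameNode already formatted ($name_dir/current exists); skipping." >&2
    return 0
  fi
  hadoop namenode -format
}

# Usage, on Hadoop-Master only:
#   format_namenode_once
```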

---------

Procedure to start the cluster (run both commands on Hadoop-Master):

Step 1: Start HDFS using the command "start-dfs.sh".

Step 2: Start MapReduce using the command "start-mapred.sh".
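To confirm that the daemons actually started, jps (shipped with the JDK) can be run on each node. For the layout in this post, Hadoop-Master should show NameNode and JobTracker, the secondary node SecondaryNameNode, and each DataNode host DataNode and TaskTracker. The sketch below automates that check; `has_daemons` and `check_cluster` are hypothetical names, and it assumes jps is on the remote PATH and that it runs as the user that started the daemons (jps normally lists only that user's JVMs).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every named daemon appears in jps output.
has_daemons() {
  local jps_output="$1" daemon; shift
  for daemon in "$@"; do
    # jps prints "<pid> <MainClass>" per line; match the class name exactly.
    if ! printf '%s\n' "$jps_output" | awk -v d="$daemon" '$2 == d { f = 1 } END { exit !f }'; then
      echo "missing: $daemon" >&2
      return 1
    fi
  done
}

# Expected daemons for the five-node layout in this post.
check_cluster() {
  local node
  has_daemons "$(ssh 192.168.150.205 jps)" NameNode JobTracker || return 1
  has_daemons "$(ssh 192.168.150.206 jps)" SecondaryNameNode || return 1
  for node in 192.168.150.207 192.168.150.208 192.168.150.209; do
    has_daemons "$(ssh "$node" jps)" DataNode TaskTracker || return 1
  done
}

# Usage, on Hadoop-Master after start-dfs.sh and start-mapred.sh:
#   check_cluster && echo "all daemons running"
```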


Now, to see the details of the NameNode, open the URL below in a browser:

-------

http://192.168.150.205:50070/ ---> NameNode details.

------
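The same check can be done from the command line. In the sketch below, `check_web_ui` is a hypothetical helper; port 50070 comes from this post, while 50030 is assumed to be the default Hadoop 1.x JobTracker web UI port. `hadoop dfsadmin -report` is another quick way to see whether all three DataNodes have registered.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that each URL answers with HTTP 200
# (redirects are followed). Returns non-zero at the first failure.
check_web_ui() {
  local url code
  for url in "$@"; do
    code=$(curl -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$url")
    echo "$url -> HTTP $code"
    [ "$code" = 200 ] || return 1
  done
}

# Usage, from any node:
#   check_web_ui http://192.168.150.205:50070/ http://192.168.150.205:50030/
#   hadoop dfsadmin -report   # should list the three DataNodes as available
```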

Kool :)
